With the rise of wearable personal electronics such as the smartwatch, more and more users carry a capable edge computing device and sensing suite on their wrist. Despite this, few projects have investigated how to perform continuous, power-efficient Human Activity Recognition (HAR) on the watch beyond traditional fitness tracking and pedometers. In SAMoSA, Mollyn et al. investigate the efficacy of streaming inputs from a smartwatch microphone and IMU to a laptop, which performs 26-class HAR. This report adapts the foundation built by Mollyn et al. so that an abridged version of their model can be trained on Edge Impulse and run independently on a wrist-worn Arduino Nano 33 BLE Sense, without assistance from an external server.

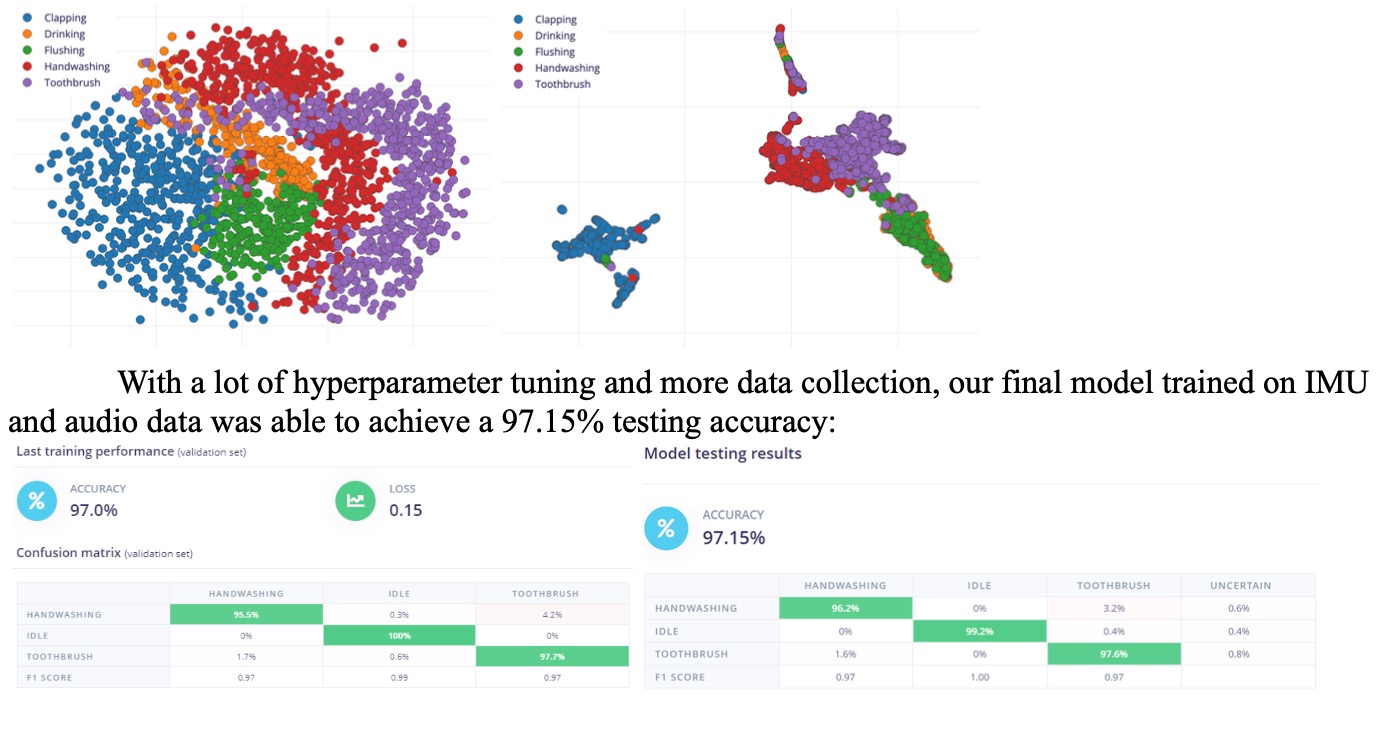

As part of our adaptation, we narrowed the set of classes detected on-device. We chose the classes we focused on during development both for the availability of data and for how practical they seemed in a real-world application. Handwashing and toothbrushing, for example, are tasks associated with an “ideal” minimum duration and would be well suited to an application focused on nudging or tracking a user’s habits. We also saw activities like drinking as potentially relevant to health-focused tracking applications. However, in initial testing, low-movement activities such as drinking and flushing caused greater confusion between classes. We attribute this to overreliance on audio, a feature whose preprocessing still needs work. Therefore, we decided to demo our project with the idle, brushing, and hand-washing classes.

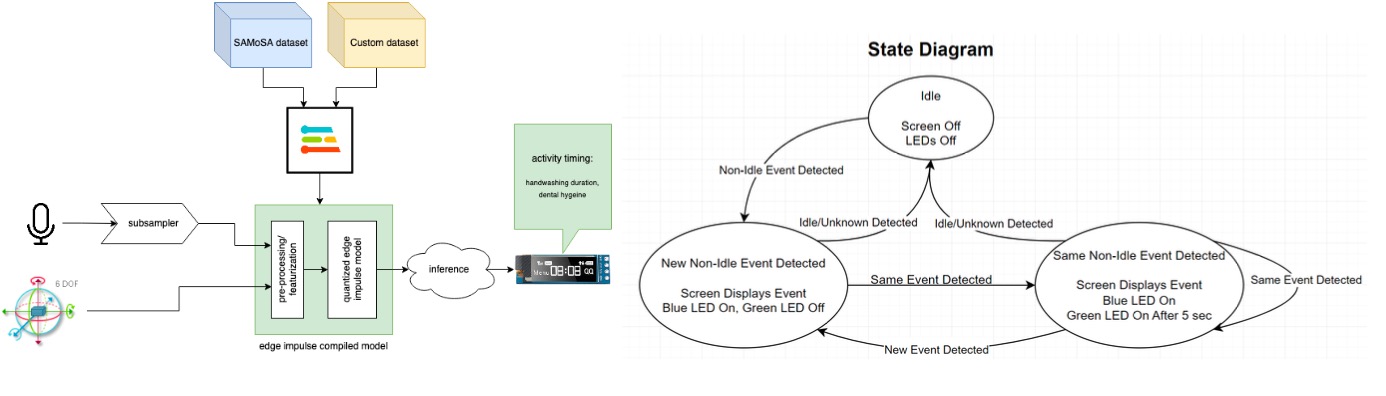

Below are our system block diagram and our finished watch, which has a screen that displays the detected action and its duration. The blue LED indicates that an action is detected, and the green LED indicates that the action’s duration has reached its threshold.
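The LED behaviour described here can be sketched as a small state tracker. This is a minimal illustration, not the firmware we ran: the names `Activity`, `LedState`, and `ActivityTimer` are hypothetical, and the threshold values are placeholders, since this report does not state the durations used. The sketch assumes the classifier emits one label per inference window and that a millisecond clock (such as Arduino's `millis()`) is available.

```cpp
#include <cstdint>

// The three demo classes named in the report.
enum class Activity : std::uint8_t { Idle, Brushing, HandWashing };

// ASSUMPTION: placeholder minimum durations in milliseconds. The report does
// not give the thresholds it used; these values are for illustration only.
constexpr std::uint32_t kBrushingThresholdMs = 120000;
constexpr std::uint32_t kHandWashingThresholdMs = 20000;

struct LedState {
    bool blue;   // a non-idle action is currently detected
    bool green;  // the current action has lasted at least its threshold
};

// Hypothetical helper that tracks how long the current label has persisted.
class ActivityTimer {
public:
    // Feed the latest classifier label and a millisecond timestamp.
    // Returns which LEDs should be lit.
    LedState update(Activity label, std::uint32_t now_ms) {
        if (label != current_) {
            // The label changed, so restart the duration count.
            current_ = label;
            start_ms_ = now_ms;
        }
        if (current_ == Activity::Idle) {
            return {false, false};
        }
        return {true, elapsed_ms(now_ms) >= threshold_ms(current_)};
    }

    // Unsigned subtraction stays correct across a 32-bit timer wraparound.
    std::uint32_t elapsed_ms(std::uint32_t now_ms) const {
        return now_ms - start_ms_;
    }

private:
    static std::uint32_t threshold_ms(Activity a) {
        switch (a) {
            case Activity::Brushing:    return kBrushingThresholdMs;
            case Activity::HandWashing: return kHandWashingThresholdMs;
            default:                    return 0;
        }
    }

    Activity current_ = Activity::Idle;
    std::uint32_t start_ms_ = 0;
};
```

On the device, `update()` would be called once per classification result with the current `millis()` value, and the returned flags written to the LED pins with `digitalWrite()`. A single misclassified window resets the count in this sketch; smoothing the labels over several windows would be one way to make it more tolerant.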